Collective Operations

Collective operations are communication routines that every participating device calls together in order to combine or exchange data that is distributed and/or shared across those devices. Here, a device is a GPU, and the devices can sit within a single machine or be spread across a cluster.

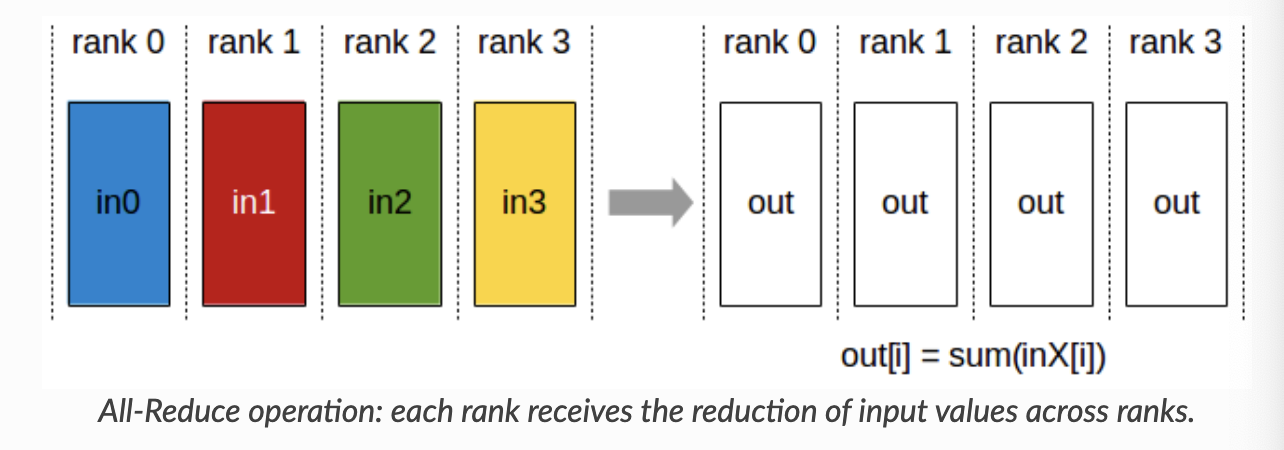

AllReduce

Performs a reduction (such as sum, min, or max) on data across devices and stores the result on every device.

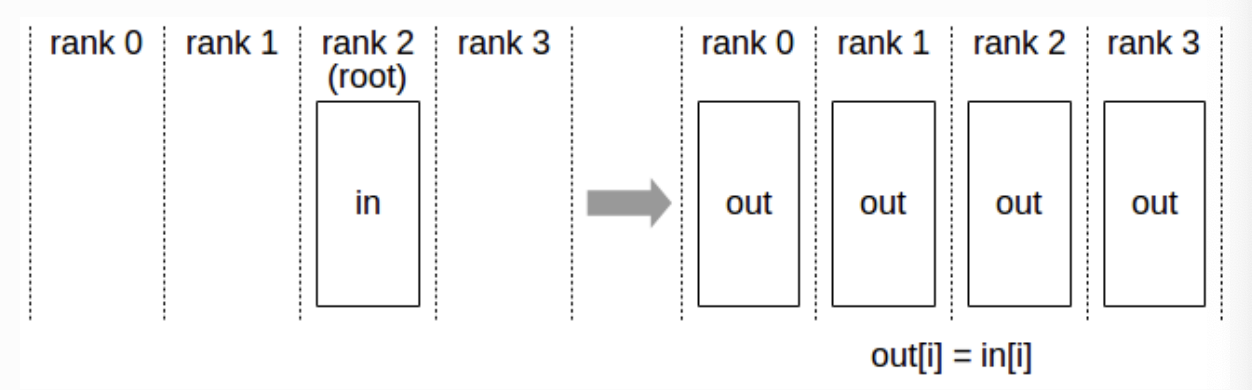
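To make the data movement concrete, here is a minimal single-process sketch in plain Python. It is only a simulation and not the NCCL API: each inner list stands in for the buffer held by one device, and the function name `all_reduce` is a hypothetical one chosen for this illustration.

```python
from functools import reduce
import operator

def all_reduce(device_data, op=operator.add):
    """Simulate AllReduce. device_data[i] is the vector held by device i."""
    # Reduce element-wise across devices: position k of every vector is combined.
    reduced = [reduce(op, column) for column in zip(*device_data)]
    # Every device ends up with its own copy of the full reduced vector.
    return [list(reduced) for _ in device_data]

result = all_reduce([[1, 2], [3, 4], [5, 6], [7, 8]])
print(result)  # [[16, 20], [16, 20], [16, 20], [16, 20]]
```

Passing `op=min` or `op=max` switches the reduction without changing how the result is distributed.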

Broadcast

Copies data from one device (the root) to every other connected device.

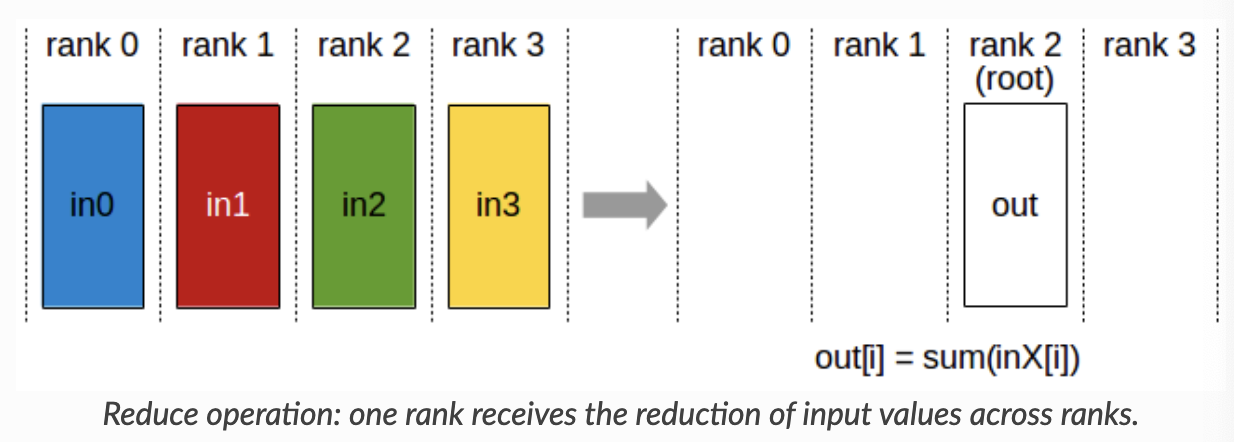
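A rough way to picture Broadcast, again as a plain Python simulation rather than a real NCCL call: the list index plays the role of the device rank, and `broadcast` and its `root` parameter are names assumed for this sketch.

```python
def broadcast(device_data, root=0):
    """Simulate Broadcast. device_data[i] is the buffer held by device i."""
    # Only the root's buffer matters; whatever the others hold is overwritten.
    payload = device_data[root]
    # Each device receives its own copy of the root's data.
    return [list(payload) for _ in device_data]

result = broadcast([[1, 2, 3], [], [], []], root=0)
print(result)  # [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 2, 3]]
```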

Reduce

Performs the same reduction as AllReduce, but stores the result on a single specified device.

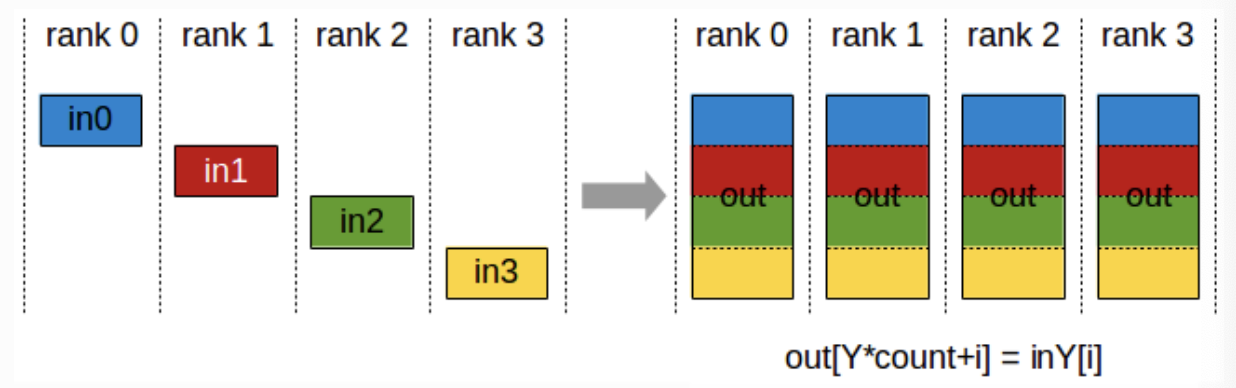
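The difference from AllReduce shows up clearly in a small simulation. This is a sketch in plain Python, not NCCL code. The name `reduce_to_root` is hypothetical, and modeling "receives nothing" as `None` is a choice made for this illustration.

```python
from functools import reduce
import operator

def reduce_to_root(device_data, root=0, op=operator.add):
    """Simulate Reduce. device_data[i] is the vector held by device i."""
    # Combine the vectors element-wise across all devices.
    reduced = [reduce(op, column) for column in zip(*device_data)]
    # Only the root keeps the result; other devices are modeled as None.
    return [reduced if rank == root else None
            for rank in range(len(device_data))]

result = reduce_to_root([[1, 2], [3, 4], [5, 6], [7, 8]], root=2)
print(result)  # [None, None, [16, 20], None]
```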

AllGather

Gathers the individual values held by the different devices, concatenates them into a single vector ordered by device, and stores that vector on every device.

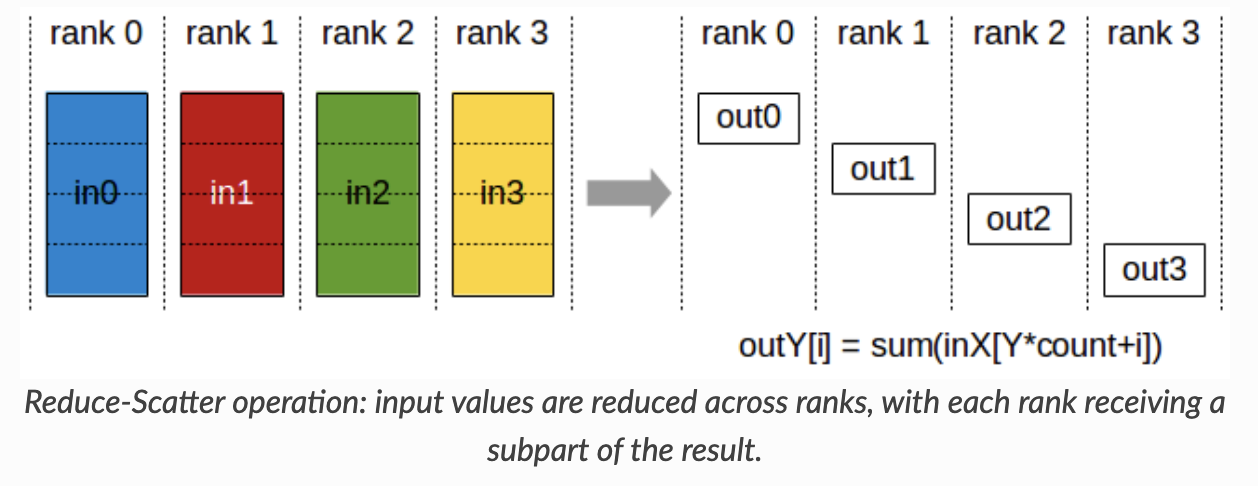
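As a hedged illustration, the sketch below simulates AllGather in one Python process. The helper name `all_gather` is made up for this example, and the chunks are assumed to be concatenated in device order.

```python
def all_gather(device_data):
    """Simulate AllGather. device_data[i] is the chunk held by device i."""
    # Concatenate the chunks in device order.
    gathered = [value for chunk in device_data for value in chunk]
    # Every device receives its own copy of the concatenated vector.
    return [list(gathered) for _ in device_data]

result = all_gather([[10], [20], [30], [40]])
print(result)
# [[10, 20, 30, 40], [10, 20, 30, 40], [10, 20, 30, 40], [10, 20, 30, 40]]
```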

ReduceScatter

If each device holds its own vector of data, ReduceScatter reduces those vectors element-wise across devices and then scatters the result, so that each device ends up with a different slice of the reduced vector.

In simple words:

Out0 -> Reduction of the 0th element of in0, in1, in2 and in3.

Out1 -> Reduction of the 1st element of in0, in1, in2 and in3.

...and so on.

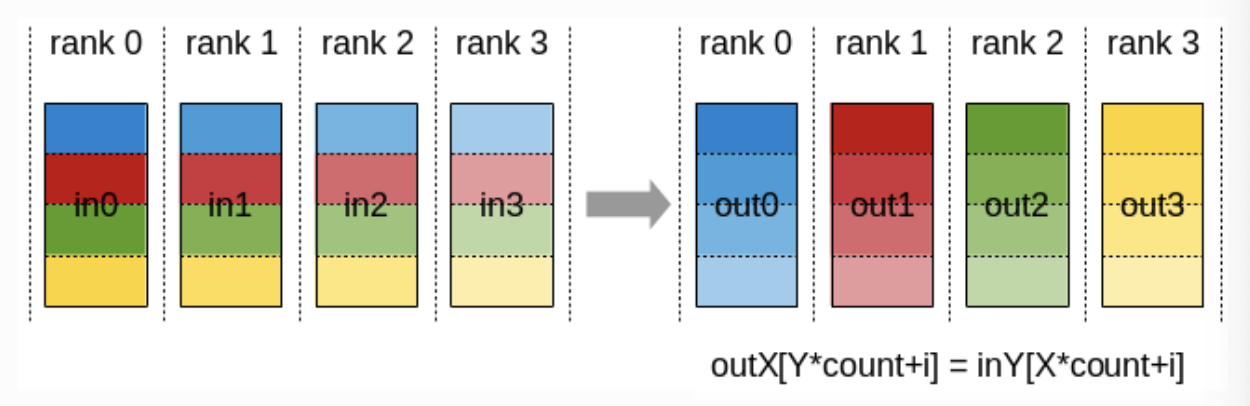
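The Out0/Out1 pattern above can be sketched as a small simulation. This is plain Python, not the NCCL API. The function name `reduce_scatter` is hypothetical, and the sketch assumes the vector length is divisible by the number of devices.

```python
from functools import reduce
import operator

def reduce_scatter(device_data, op=operator.add):
    """Simulate ReduceScatter. device_data[i] is the vector held by device i."""
    num_devices = len(device_data)
    # Step 1: reduce element-wise across devices.
    reduced = [reduce(op, column) for column in zip(*device_data)]
    # Step 2: split the reduced vector into equal slices, one per device.
    # Assumption: len(reduced) is a multiple of num_devices.
    chunk = len(reduced) // num_devices
    return [reduced[rank * chunk:(rank + 1) * chunk]
            for rank in range(num_devices)]

in0 = [1, 2, 3, 4]
in1 = [10, 20, 30, 40]
in2 = [100, 200, 300, 400]
in3 = [1000, 2000, 3000, 4000]
result = reduce_scatter([in0, in1, in2, in3])
print(result)  # [[1111], [2222], [3333], [4444]]
```

Here device 0 holds the sum of the 0th elements, device 1 the sum of the 1st elements, and so on.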

AlltoAll

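Every device exchanges data with every other device: each device sends a separate chunk to each of the others and receives one chunk from each of them in return.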

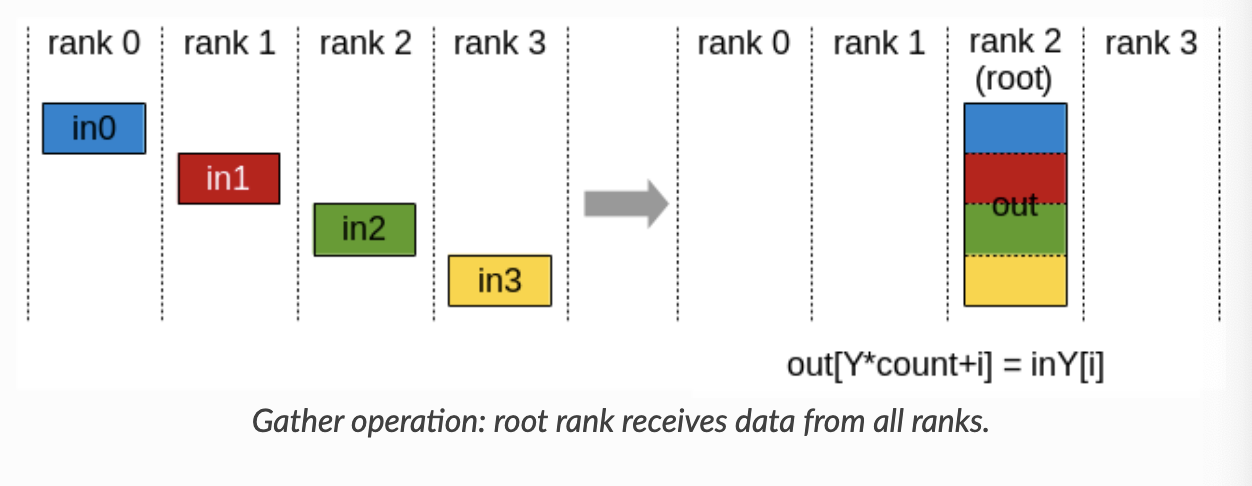
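One way to think about this exchange is as a transpose of a "sender by receiver" grid. The sketch below simulates that in plain Python. The name `all_to_all` and the convention that `device_data[src][dst]` is the chunk `src` sends to `dst` are assumptions made for this illustration.

```python
def all_to_all(device_data):
    """Simulate AlltoAll.

    device_data[src][dst] is the chunk that device src sends to device dst.
    """
    num_devices = len(device_data)
    # Device dst collects, in sender order, the chunk addressed to it
    # by every device (including itself).
    return [[device_data[src][dst] for src in range(num_devices)]
            for dst in range(num_devices)]

result = all_to_all([
    ["a0", "a1", "a2"],  # chunks sent by device 0
    ["b0", "b1", "b2"],  # chunks sent by device 1
    ["c0", "c1", "c2"],  # chunks sent by device 2
])
print(result)
# [['a0', 'b0', 'c0'], ['a1', 'b1', 'c1'], ['a2', 'b2', 'c2']]
```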

Gather

Gathers the individual values held by the different devices and concatenates them, ordered by device, onto a single specified device.

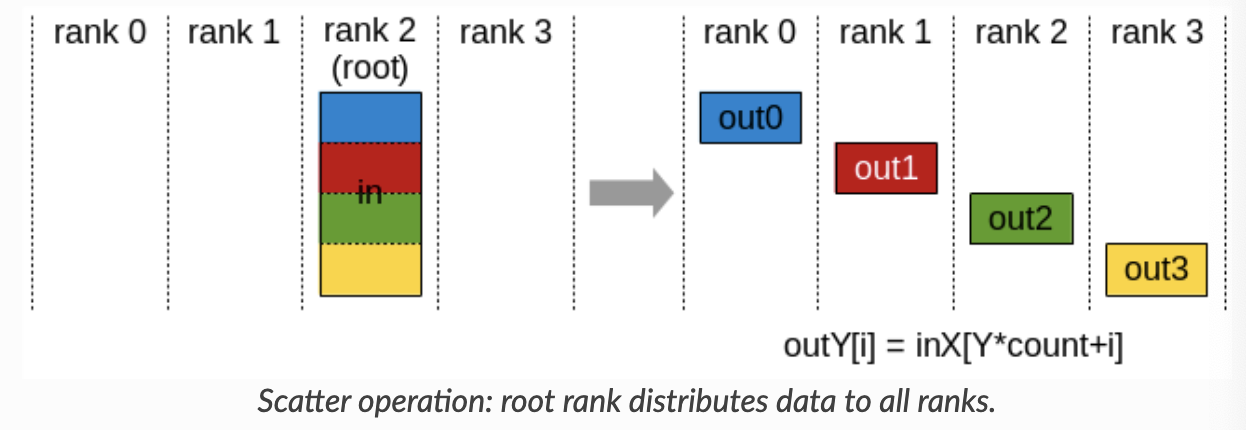
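A minimal simulation of Gather, assuming device order for the concatenation. The function name `gather` and the use of `None` for devices that receive nothing are illustrative choices, not part of any real library.

```python
def gather(device_data, root=0):
    """Simulate Gather. device_data[i] is the chunk held by device i."""
    # Concatenate the chunks in device order.
    gathered = [value for chunk in device_data for value in chunk]
    # Only the root receives the concatenated vector.
    return [gathered if rank == root else None
            for rank in range(len(device_data))]

result = gather([[10], [20], [30], [40]], root=0)
print(result)  # [[10, 20, 30, 40], None, None, None]
```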

Scatter

Distributes a vector held by one device across all the available devices, with each device getting an equal share.
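Scatter is the inverse of Gather, which a short simulation makes visible. This is a sketch in plain Python with a hypothetical `scatter` helper, and it assumes the vector length is divisible by the number of devices.

```python
def scatter(vector, num_devices):
    """Simulate Scatter: split the root's vector into equal per-device shares."""
    # Assumption: len(vector) is a multiple of num_devices.
    chunk = len(vector) // num_devices
    # Device `rank` receives the rank-th contiguous slice.
    return [vector[rank * chunk:(rank + 1) * chunk]
            for rank in range(num_devices)]

result = scatter([1, 2, 3, 4, 5, 6, 7, 8], num_devices=4)
print(result)  # [[1, 2], [3, 4], [5, 6], [7, 8]]
```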

Note:

All the images in this article are taken from https://docs.nvidia.com/deeplearning/nccl/user-guide/docs/usage/collectives.html.